What you need to know about Responsible AI in recruitment and HR

Rapid developments in the field of Artificial Intelligence have opened up many new opportunities. However, as AI systems become more powerful, they also become more complex and opaque. When used irresponsibly, this technology can disadvantage individuals or even society as a whole.

How can we ensure that AI has a positive impact and mitigate bias in the recruitment and HR industry?

In a recent webinar, we explored why using AI ethically is so important in this industry. The discussion underscored that AI technologies must respect human rights and adhere to high ethical standards, and that responsible AI is not just a regulatory necessity but a commitment to fairness, transparency, and accountability in all recruitment processes.

In this article, we explore the core themes from the webinar, unpacking why Responsible AI is an important topic in recruitment and HR, what it entails, and key questions to ask when considering Responsible AI.

Why we need Responsible AI in Recruitment

Increasing complexity

AI systems started out as simple rule-based systems in the 1980s and 1990s. The modern-day AI paradigm is Machine Learning (or, more specifically, Deep Learning), which learns patterns from data. Impressive models like GPT exist today largely because they were trained on an enormous quantity of data, using enormous computing resources.

Over the decades, these systems have become more and more complex. As a result, we lose visibility into what is happening inside them.

“If a rule-based system gives an output X, it is easy to trace back through the rules why that decision was made. However, if an LLM gives a certain output, it is nearly impossible to pinpoint exactly where that decision came from.”
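To make the contrast in this quote concrete, here is a minimal sketch of a hypothetical rule-based candidate scorer. The rules, field names and point values are invented for illustration and do not describe any Textkernel product. The point is that every score comes with the list of rules that produced it, which is exactly the kind of trace an LLM cannot readily provide.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Rule:
    """One hand-written rule: a readable name, a test, and the points it awards."""
    name: str
    condition: Callable[[dict], bool]
    points: int


def score_candidate(candidate: dict, rules: list[Rule]) -> tuple[int, list[str]]:
    """Return the total score plus the names of the rules that fired.

    The second value is the explanation: every point in the score
    can be traced back to a named rule.
    """
    score = 0
    fired = []
    for rule in rules:
        if rule.condition(candidate):
            score += rule.points
            fired.append(rule.name)
    return score, fired


# Hypothetical rules and candidate, for illustration only.
rules = [
    Rule("5+ years of experience", lambda c: c["years_experience"] >= 5, 2),
    Rule("CRM skills", lambda c: "crm" in c["skills"], 1),
    Rule("speaks Dutch", lambda c: "dutch" in c["languages"], 1),
]
candidate = {
    "years_experience": 6,
    "skills": {"crm", "negotiation"},
    "languages": {"english"},
}

score, trace = score_candidate(candidate, rules)
print(score, trace)  # 3 ['5+ years of experience', 'CRM skills']
```

An LLM-based matcher offers no equivalent of the `trace` list: its output is the result of billions of learned parameters rather than a handful of named rules.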

This added complexity and reduced interpretability are important reasons why Responsible AI is gaining traction these days.

Increasing impact

As AI systems become ubiquitous, they increasingly affect people’s lives across all kinds of domains. When these systems perform poorly, they can disadvantage certain people and harm their lives.

“Within the HR domain, a sensible generalization is that people with good communication skills might do well in a sales role. But these generalizations can also extend to unwanted relations. For example, making distinctions between male and female candidates, or discriminating by ethnicity.”

What Responsible AI means in Recruitment

“AI should benefit society as a whole. Not just the service provider, the agency, the candidate. It can and should benefit all stakeholders involved. That is the core principle of responsible AI.”

Responsible AI is crucial in recruitment to avoid perpetuating existing biases or creating new ones. AI systems, by nature, generalize from the data they learn from. This can inadvertently lead to discrimination if not carefully monitored and controlled. Ensuring AI systems in HR are responsible means actively preventing these risks to foster an equitable job market. This involves designing AI tools that enhance decision-making without replacing human judgment, ensuring that AI supports rather than dictates recruitment outcomes.

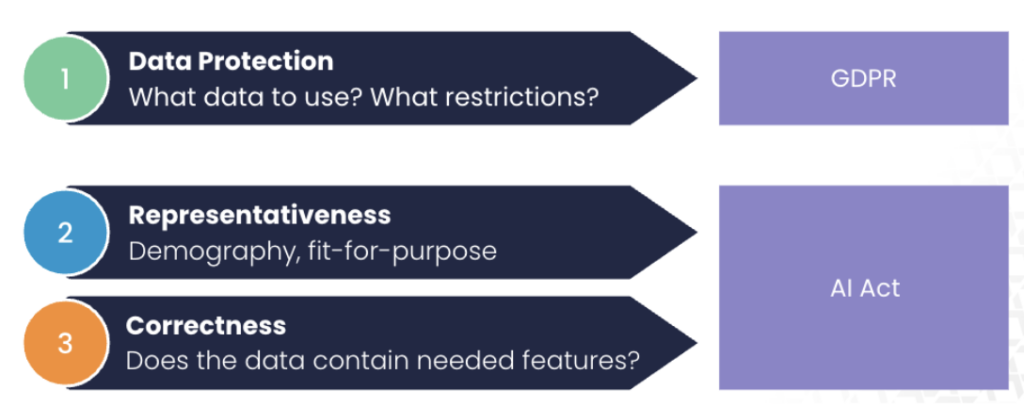

Addressing AI Risks

To mitigate these risks, organizations must implement AI systems that prioritize fairness and transparency. This involves rigorous checks on the training data to ensure it does not perpetuate existing societal biases, as well as adherence to strict regulatory standards such as the GDPR and the forthcoming EU AI Act. It is also crucial to continually audit and refine AI models so that they remain aligned with ethical guidelines and perform as intended, without harmful side effects.
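As one concrete example of such an audit, a common check compares selection rates across groups of candidates. The sketch below computes each group’s selection rate and its impact ratio relative to the most-selected group (the metric used in bias audits under New York City’s Local Law 144), and flags ratios below 0.8, following the “four-fifths” rule of thumb from US employment guidance. The group labels and counts are hypothetical, and this illustrates the metric only; it is not Textkernel’s audit procedure.

```python
def impact_ratios(
    outcomes: dict[str, tuple[int, int]], threshold: float = 0.8
) -> dict[str, dict]:
    """Compute selection rates and impact ratios per group.

    `outcomes` maps a group label to (number selected, number of applicants).
    Groups with zero applicants are skipped, since their rate is undefined.
    """
    rates = {
        group: selected / total
        for group, (selected, total) in outcomes.items()
        if total > 0
    }
    if not rates:
        return {}

    best = max(rates.values())  # selection rate of the most-selected group
    report = {}
    for group, rate in rates.items():
        # If nobody was selected at all, no group is disadvantaged relative to another.
        ratio = rate / best if best > 0 else 1.0
        report[group] = {
            "selection_rate": rate,
            "impact_ratio": ratio,
            "flagged": ratio < threshold,  # below the four-fifths rule of thumb
        }
    return report


# Hypothetical screening outcomes for two groups.
outcomes = {"group_a": (30, 100), "group_b": (20, 100)}
report = impact_ratios(outcomes)
for group, row in report.items():
    print(
        f"{group}: rate={row['selection_rate']:.2f} "
        f"ratio={row['impact_ratio']:.2f} flagged={row['flagged']}"
    )
# group_a: rate=0.30 ratio=1.00 flagged=False
# group_b: rate=0.20 ratio=0.67 flagged=True
```

A flag is a signal to investigate the data and the model, not proof of discrimination on its own; running the check regularly is what turns it into the continual audit described above.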

Ethical AI Design includes the following principles:

- Fairness: AI should treat everyone fairly and without bias. In HR, fairness and non-discrimination are vital. All candidates should be treated equally and judged only on their merits.

- Transparency: AI has to be explainable and interpretable, at least to some degree. For example, if an AI suggests a candidate for a job, it should be clear to the user why that candidate is suitable.

- Human-Centered: AI should not be designed to take over the entire process from start to finish. It should be designed to aid humans in their day-to-day tasks.

Three Questions about Responsible AI in Practice

A practical approach to implementing Responsible AI involves asking three pivotal questions:

- What training data is used? Every machine learning system starts with data. When using AI responsibly, the data used needs to adhere to certain standards.

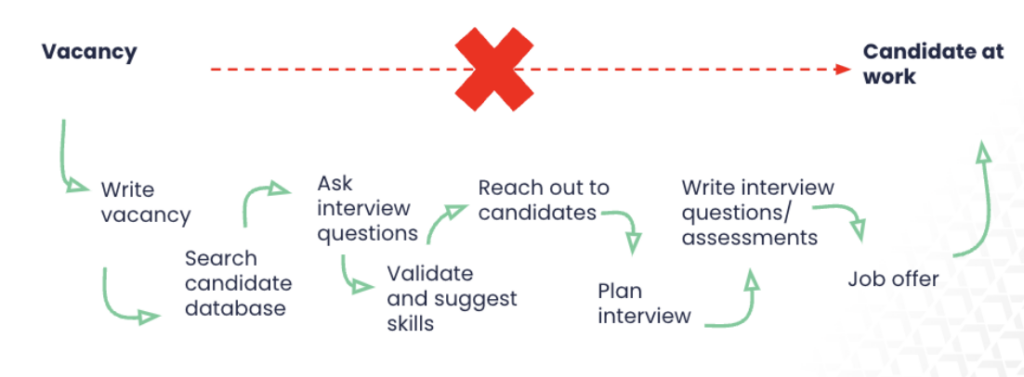

- What recruitment processes do you automate? The scope (or reach) of a single AI system largely determines the risk that comes with it. An AI model that performs an end-to-end candidate-job match carries a much higher risk than an AI model that performs only one of the subtasks of this process:

  - You lose a lot of transparency if you do not get intermediate outputs. As a user, you cannot check any of the model’s reasoning or truthfulness.

  - In the split-task approach, we can intervene during the process. If one step of the pipeline produces wrong results, we can identify and measure the error, and correct it if necessary.

  - Subtasks can often be handled equally well, or even better, by simpler and therefore more transparent AI models.

- How does the user keep control? LLMs do offer very powerful capabilities. At Textkernel, we believe they are the future, and they can help improve our processes when implemented with care.

Here is one example of how LLMs are used in Textkernel products.

In this example, LLMs allow users to type a Google-like free-text search query. The LLM interprets the input and builds the appropriate Textkernel search query, making our products even easier to use, especially for users who are new to the system.

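Because the LLM’s role is limited to translating the input into a search query, rather than selecting candidates itself, we reap the benefits of LLMs without compromising on transparency and fairness.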
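The design idea behind this example (split the task so that the LLM only produces an intermediate, human-readable query, then let a transparent step do the matching) can be sketched as follows. Everything here is hypothetical: `StructuredQuery`, `interpret_query` and `search` are not Textkernel’s API, and `interpret_query` is a trivial keyword parser standing in for the LLM call so that the example runs. What matters is the shape of the pipeline: the intermediate query is visible, the user can correct it, and every match can be explained by plain filters.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class StructuredQuery:
    """The intermediate output: small, readable, and editable by the user."""
    job_title: Optional[str] = None
    skills: list[str] = field(default_factory=list)
    location: Optional[str] = None


# Hypothetical vocabularies used by the stand-in parser below.
KNOWN_SKILLS = {"python", "sql", "negotiation"}
KNOWN_LOCATIONS = {"amsterdam", "utrecht"}


def interpret_query(text: str) -> StructuredQuery:
    """Stand-in for the LLM step: turn free text into a structured query.

    A real system would prompt an LLM here; this stub only matches keywords.
    """
    words = text.lower().replace(",", " ").split()
    query = StructuredQuery()
    query.skills = [w for w in words if w in KNOWN_SKILLS]
    query.location = next((w for w in words if w in KNOWN_LOCATIONS), None)
    if "developer" in words:
        query.job_title = "developer"
    return query


def search(query: StructuredQuery, candidates: list[dict]) -> list[dict]:
    """Transparent step: plain filters, so every match can be explained."""
    results = []
    for c in candidates:
        if query.job_title and query.job_title != c["title"]:
            continue
        if query.location and query.location != c["location"]:
            continue
        if not set(query.skills) <= set(c["skills"]):
            continue
        results.append(c)
    return results


candidates = [
    {"name": "A", "title": "developer", "location": "amsterdam", "skills": ["python", "sql"]},
    {"name": "B", "title": "developer", "location": "utrecht", "skills": ["python"]},
]

query = interpret_query("Python developer in Amsterdam")
print(query)  # the user sees exactly how the free text was interpreted
print([c["name"] for c in search(query, candidates)])
```

If the interpretation is wrong, the user fixes the intermediate query (for example, setting `query.location = None` to widen the search to candidate B) instead of having to second-guess an end-to-end model.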

Integrating Responsible AI in Recruitment

The integration of AI into HR practices offers tremendous potential but also presents significant ethical challenges. Organizations must not only understand these challenges but also actively develop AI systems that are transparent, fair, and accountable. Committing to ethical AI practices will build trust in these technologies and ensure they serve as valuable tools across the recruitment and HR landscape.

To gain a deeper understanding of Responsible AI in recruitment, watch the on-demand webinar, “What you didn’t know about Responsible AI.”

Textkernel has been at the forefront of AI for over 20 years. We are proud members of, and contributors to, the Royal Netherlands Standardization Institute. Through its Standardization Committee for AI & Big Data, we help shape the standards that define how organizations should implement the AI Act in practice. Learn more about Responsible AI.

Author: Vincent Slot

Team Lead R&D

Vincent is spearheading the technical aspects of the Responsible AI efforts within Textkernel. He is a member of the AI & Big Data committee of the Royal Netherlands Standardization Institute, where he contributes to creating European standards that help organizations implement the EU AI Act.

Read more on AI:

- Textkernel passed NYC AI law audit, believes legislation is key

- Skills-based matching with AI: Opportunities and pitfalls