As AI continues to reshape the recruitment and HR sectors, legislation that ensures ethical and fair practices has never been more critical. Globally, countries are racing to establish AI regulations: some have already adopted laws, others are finalizing theirs, and some are still in the drafting stages. Among these efforts, the European Union’s AI Act stands out as the first comprehensive set of rules governing artificial intelligence. It entered into force on August 1, 2024.

In a recent webinar, we explored the implications of AI legislation for the recruitment and HR industries, discussing how laws such as the EU AI Act and the NYC Automated Employment Decision Tools (AEDT) law will affect the way AI systems are developed, deployed, and managed.

This article explains why AI legislation is necessary, then explores the critical aspects of the EU AI Act and what they mean for your organization.

Why we need AI legislation:

Without clear regulations, the deployment of AI systems can lead to inconsistent practices, diminished consumer trust, and unfair competition. Here’s why AI legislation is essential:

- Inconsistent Practices: Currently, the adoption of Responsible AI practices is largely voluntary, which allows room for ethical breaches. Without mandatory regulations, organizations may implement AI in ways that lack transparency and fairness, leading to inconsistent and potentially harmful outcomes.

- Consumer Trust: AI systems that are unregulated or poorly governed can lead to public distrust. When AI is seen as unreliable or biased, it can damage the reputation of the companies that use it and hinder broader adoption of AI technologies.

- Unfair Competition: In a landscape without regulation, companies that cut corners by ignoring ethical AI practices may gain an unfair advantage over those that strive to implement AI responsibly. This creates an uneven playing field, ultimately disadvantaging ethical businesses.

Overview of the EU AI Act

The EU AI Act is the first comprehensive set of rules dedicated to governing the use of artificial intelligence, and one of the first AI laws to come into force. It’s designed to protect fundamental rights and ensure that AI systems used across various industries, including recruitment and HR, are both safe and trustworthy. The Act seeks to balance innovation with ethical responsibility, providing a structured framework that guides the development and deployment of AI technologies in a way that benefits society.

Since its inception in 2021, the EU AI Act has undergone extensive deliberation and refinement. It has now become official law, with a 36-month staggered transition period. Most of its provisions will become enforceable 24 months after the Act entered into force.

Although this timeline might seem distant, organizations should not delay their preparations. The EU AI Act imposes a comprehensive set of obligations that require careful planning and implementation. Starting early is essential to ensure compliance and avoid the significant penalties associated with non-compliance.
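For planning purposes, the staggered timeline reduces to simple month arithmetic. The sketch below is only an illustration: it assumes the entry-into-force month of August 2024 and the 24- and 36-month offsets described above. The names `milestone_month` and `MILESTONES` are hypothetical, and the result is a year and month only, so the exact application day should always be taken from the Act itself.

```python
# Illustrative sketch only: year-month arithmetic for the transition period.
ENTRY_INTO_FORCE = (2024, 8)  # (year, month): the Act entered into force in August 2024

# Offsets in months after entry into force, as described in this article.
MILESTONES = {
    "most provisions enforceable": 24,
    "end of staggered transition": 36,
}

def milestone_month(start, offset_months):
    """Return the (year, month) that lies offset_months after start."""
    year, month = start
    total = year * 12 + (month - 1) + offset_months  # months counted from year 0
    return total // 12, total % 12 + 1

for label, offset in MILESTONES.items():
    year, month = milestone_month(ENTRY_INTO_FORCE, offset)
    print(f"{label}: {year}-{month:02d}")
```

A compliance roadmap could work backwards from these months to schedule internal reviews well ahead of each deadline.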

EU AI Act Classification System for Recruitment and HR

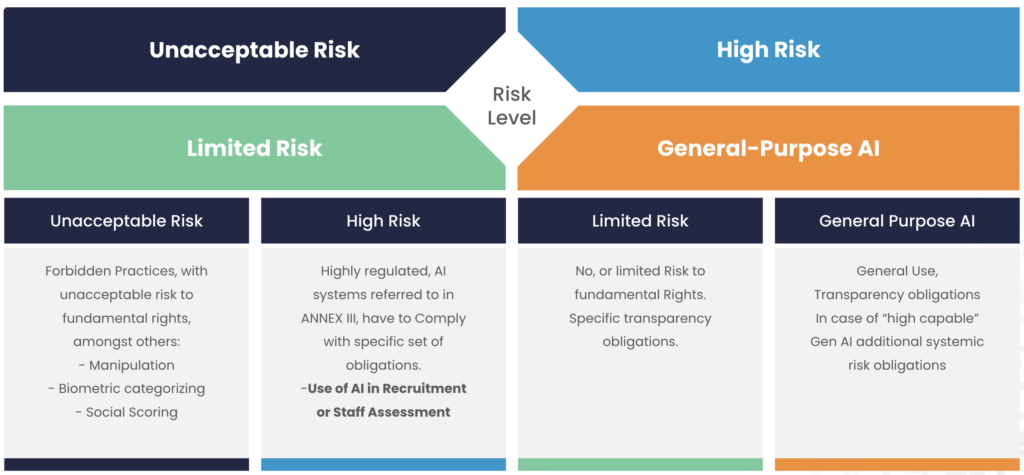

One of the most crucial aspects of the EU AI Act is its risk-based classification system, which categorizes AI applications based on their potential impact on individuals and society. This classification determines the level of regulatory oversight required, with particular relevance to the recruitment and HR sectors. The system introduces four distinct categories:

1. Unacceptable Risk:

AI practices that fall under this category are outright prohibited due to their potential to harm individuals or society. Examples include AI systems that manipulate users through subliminal techniques, exploit vulnerable groups, or employ biometric categorization for social scoring. The provisions of the EU AI Act covering these practices apply 6 months after the Act entered into force, from February 2, 2025.

2. High Risk:

The majority of the EU AI Act focuses on high-risk AI systems. These provisions apply 24 months after the Act entered into force, from August 2, 2026. High-risk applications are those that, while permissible, pose significant risks to fundamental rights and therefore require strict regulation. In the context of recruitment and HR, AI systems that evaluate job applicants or make decisions affecting career progression are classified as high-risk. This means they must adhere to rigorous standards for transparency, fairness, and accountability. Although some exceptions may apply, organizations must exercise caution when claiming that an AI system does not fall into this category.

3. Limited Risk:

AI systems in this category pose lower risks to fundamental rights but are still subject to specific transparency obligations. For instance, chatbots and AI-powered image generators must clearly inform users that they are interacting with an AI system or that the content has been generated by AI.

4. General-Purpose AI Systems:

This category, added in the latest iteration of the Act, applies to AI systems intended for broad or general use. These systems are primarily subject to transparency obligations regarding their training data and underlying models. This ensures that any specific AI application built on top of a general-purpose system can meet the higher regulatory requirements if classified as high-risk.

For recruitment and HR professionals, understanding these classifications is essential for navigating the regulatory landscape. High-risk applications, such as those involving personal data or decisions that could significantly impact someone’s career, must be carefully managed to ensure compliance and prevent bias or potential misuse.
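One practical way to work with these categories is to keep an inventory of the AI systems your organization uses and tag each one with its presumed category. The sketch below is a minimal illustration of that idea. The system names and their assigned categories are hypothetical examples based on the descriptions above, not a legal determination, and the names `RiskCategory`, `EXAMPLE_INVENTORY`, and `systems_in` are invented for this example.

```python
from enum import Enum

class RiskCategory(Enum):
    """The four categories described in this article, with a short summary each."""
    UNACCEPTABLE = "prohibited"
    HIGH = "strict requirements for transparency, fairness, and accountability"
    LIMITED = "specific transparency obligations"
    GENERAL_PURPOSE = "transparency about training data and underlying models"

# Hypothetical inventory: real classifications need legal review per system.
EXAMPLE_INVENTORY = {
    "candidate ranking model": RiskCategory.HIGH,        # evaluates job applicants
    "promotion recommendation tool": RiskCategory.HIGH,  # affects career progression
    "careers-site chatbot": RiskCategory.LIMITED,        # must disclose it is an AI
    "underlying language model": RiskCategory.GENERAL_PURPOSE,
}

def systems_in(category, inventory):
    """Return the names of all inventoried systems tagged with the given category."""
    return sorted(name for name, tagged in inventory.items() if tagged is category)

for name in systems_in(RiskCategory.HIGH, EXAMPLE_INVENTORY):
    print(f"{name}: {RiskCategory.HIGH.value}")
```

Keeping such a list up to date makes it easier to see at a glance which systems need the most preparation.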

EU AI Act Conformity Assessment

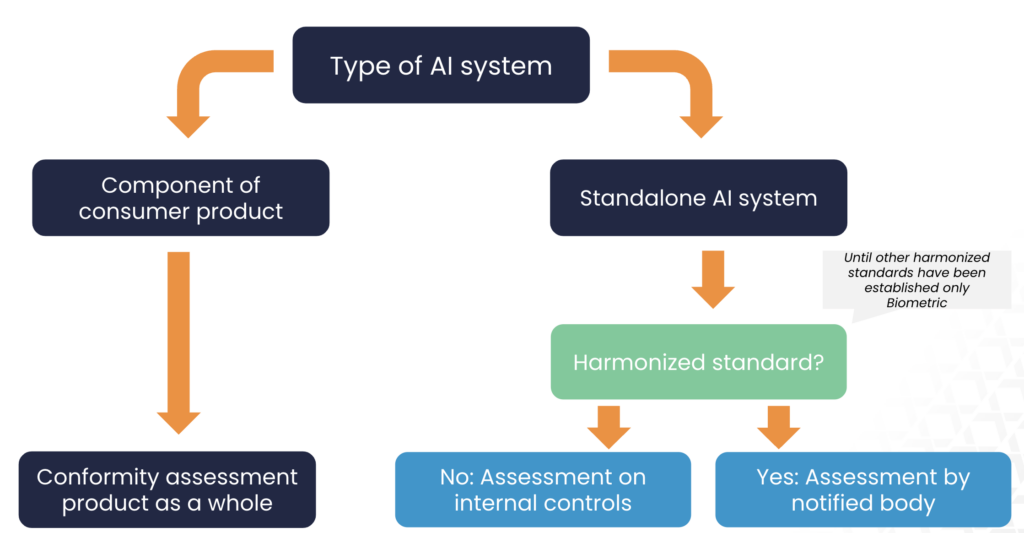

Before a high-risk AI system can be placed on the market by a provider or deployer, the EU AI Act requires that a conformity assessment be conducted. This assessment is critical to ensuring that AI systems meet the stringent standards set by the legislation, particularly in sectors like recruitment and HR, where the potential impact on individuals is significant.

There are different ways such conformity assessments can be conducted:

- Component of a Consumer Product:

If the AI system is part of a broader consumer product, it is included in the conformity assessment of the product as a whole. This ensures that the AI component is evaluated alongside the entire product to ensure it meets safety and regulatory standards.

- Standalone AI System:

For standalone AI systems, the conformity assessment can be conducted through one of two methods:

- Internal Controls: If no harmonized standards are applicable, the organization may carry out the assessment based on internal controls. This method requires the organization to demonstrate that the AI system complies with the necessary requirements through documented internal processes.

- Assessment by a Notified Body: If the AI system falls under a category with applicable harmonized standards—such as the use of biometrics—the conformity assessment must be conducted by an external, independent notified body. This approach ensures an additional layer of scrutiny for systems with significant potential impact.

Currently, harmonized standards are primarily developed for biometric applications. Until more standards are established, most conformity assessments will rely on internal controls. However, as the regulatory landscape evolves, it’s essential for organizations to stay informed and prepared for the potential need for external assessments.

EU AI Act Enforcement and Fines

To ensure compliance with the EU AI Act, enforcement will be managed by national supervisory authorities, coordinated by the EU AI Office. The Act imposes significant fines for non-compliance, each capped at a fixed amount or a percentage of global annual revenue, whichever is higher:

- Use of prohibited AI: Up to €35 million, or 7% of global revenue.

- Other AI violations: Up to €15 million, or 3% of global revenue.

- Supplying incorrect or misleading information to authorities: Up to €7.5 million, or 1% of global revenue.

These penalties apply to all entities in the AI value chain, making it crucial for organizations to ensure their AI systems comply with the Act’s requirements. Starting preparations now is essential to avoid these substantial fines.
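To get a feel for the exposure, the fine ceilings above can be worked out for a given company size. The sketch below is a rough illustration, not legal advice. It assumes the ceiling is the higher of the fixed amount and the revenue percentage, which is the general rule in the Act; the Act treats smaller companies differently, and that is not modelled here. The names `FINE_TIERS` and `max_fine_eur` are hypothetical.

```python
# Illustrative sketch only: upper bound of the fine per violation type.
# Each tier is (fixed cap in EUR, percentage of global annual revenue).
FINE_TIERS = {
    "prohibited_ai": (35_000_000, 7),
    "other_violation": (15_000_000, 3),
    "misinformation": (7_500_000, 1),
}

def max_fine_eur(violation, global_annual_revenue_eur):
    """Return the fine ceiling, assuming the higher of the two amounts applies."""
    fixed_cap, percent = FINE_TIERS[violation]
    # Integer arithmetic avoids floating-point rounding on large amounts.
    revenue_based = global_annual_revenue_eur * percent // 100
    return max(fixed_cap, revenue_based)

print(max_fine_eur("prohibited_ai", 1_000_000_000))  # 70000000
print(max_fine_eur("prohibited_ai", 100_000_000))    # 35000000
```

For a company with €1 billion in global revenue, the percentage dominates (€70 million); for one with €100 million, the fixed cap of €35 million is the higher figure.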

Integrating Responsible AI in Recruitment

Globally, countries are developing regulations to address AI’s impact, with the EU AI Act leading the way as the first comprehensive set of rules.

For organizations in recruitment and HR, aligning with these new standards, particularly those in the EU AI Act, is crucial. This legislation introduces strict requirements for high-risk AI applications, making it imperative to prepare now to ensure compliance.

At Textkernel, we are dedicated to championing ethical AI. As active contributors to the Royal Netherlands Standardization Institute, we help shape the standards guiding AI in HR. Additionally, our successful bias audit under New York’s AEDT law underscores our commitment to fairness and transparency. Complying with the EU AI Act and reducing bias are essential steps in building trust in AI. Learn more about Responsible AI.

With over 20 years of experience, we’ve been a global leader in AI-powered recruitment and talent management solutions, helping over 2,500 organizations worldwide work smarter and innovate faster. Our technologies prioritize transparency, diversity, and data security, ensuring that AI serves as a tool to empower human decision-making. To learn more about our AI-powered solutions and how we can help your organization stay compliant and innovative, contact us today.

If you’re looking to gain a deeper understanding of Responsible AI in recruitment, watch the on-demand webinar, “What you didn’t know about Responsible AI.”

Author: Jaap Kersten

International Legal Counsel
